Choosing your Compute Add-on

Choosing the right Compute Add-on for your vector workload.

You have two options for scaling your vector workload:

- Increase the size of your database. This guide will help you choose the right size for your workload.

- Spread your workload across multiple databases. You can find more details about this approach in Engineering for Scale.

Dimensionality

The number of dimensions in your embeddings is the most important factor in choosing the right Compute Add-on. In general, the lower the dimensionality, the better the performance. We've provided guidance for some of the more common embedding dimensions below.

For each benchmark, we used Vecs to create a collection, upload the embeddings to a single table, and create an inner-product index for the embedding column. We then ran a series of queries to measure the performance of different compute add-ons:

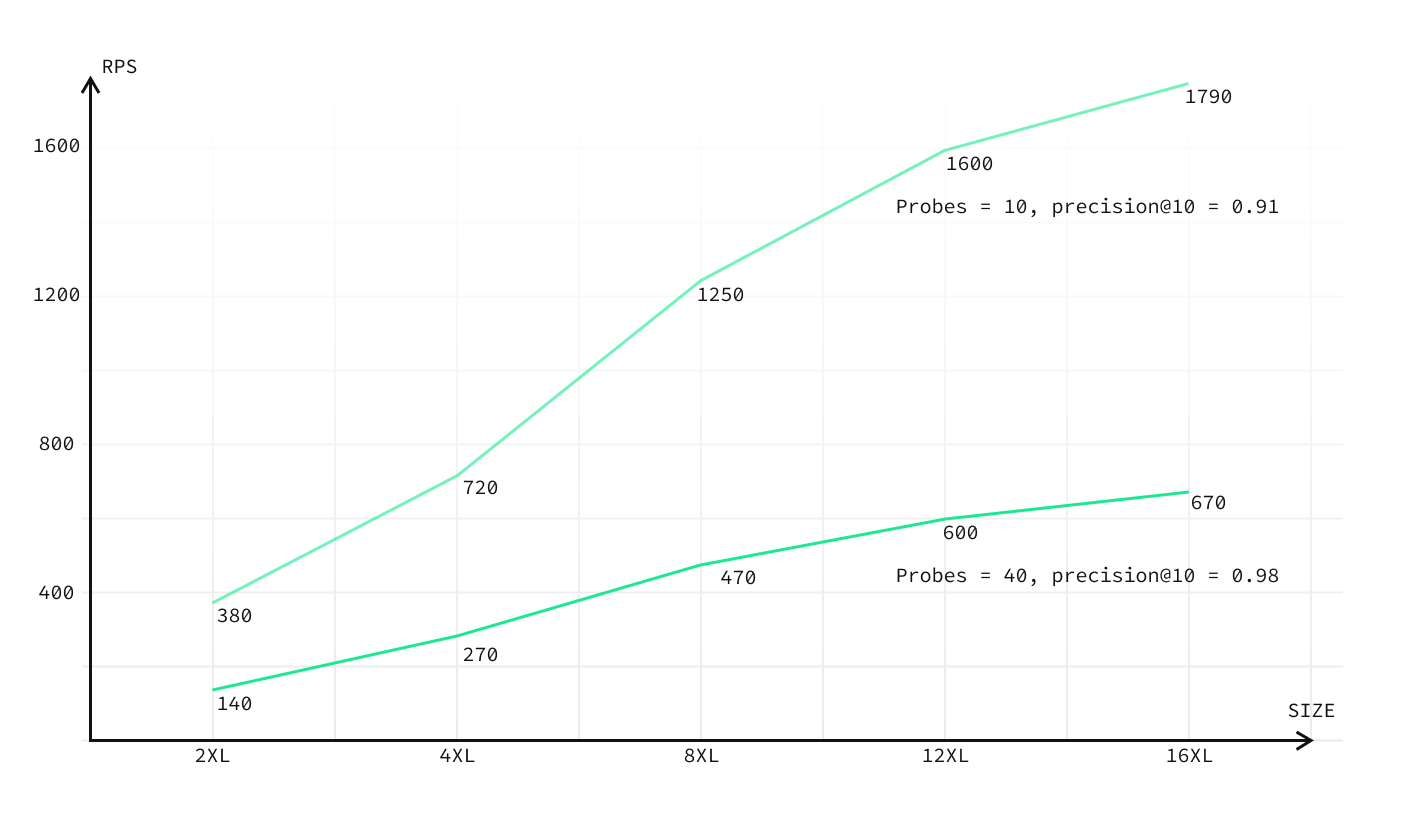

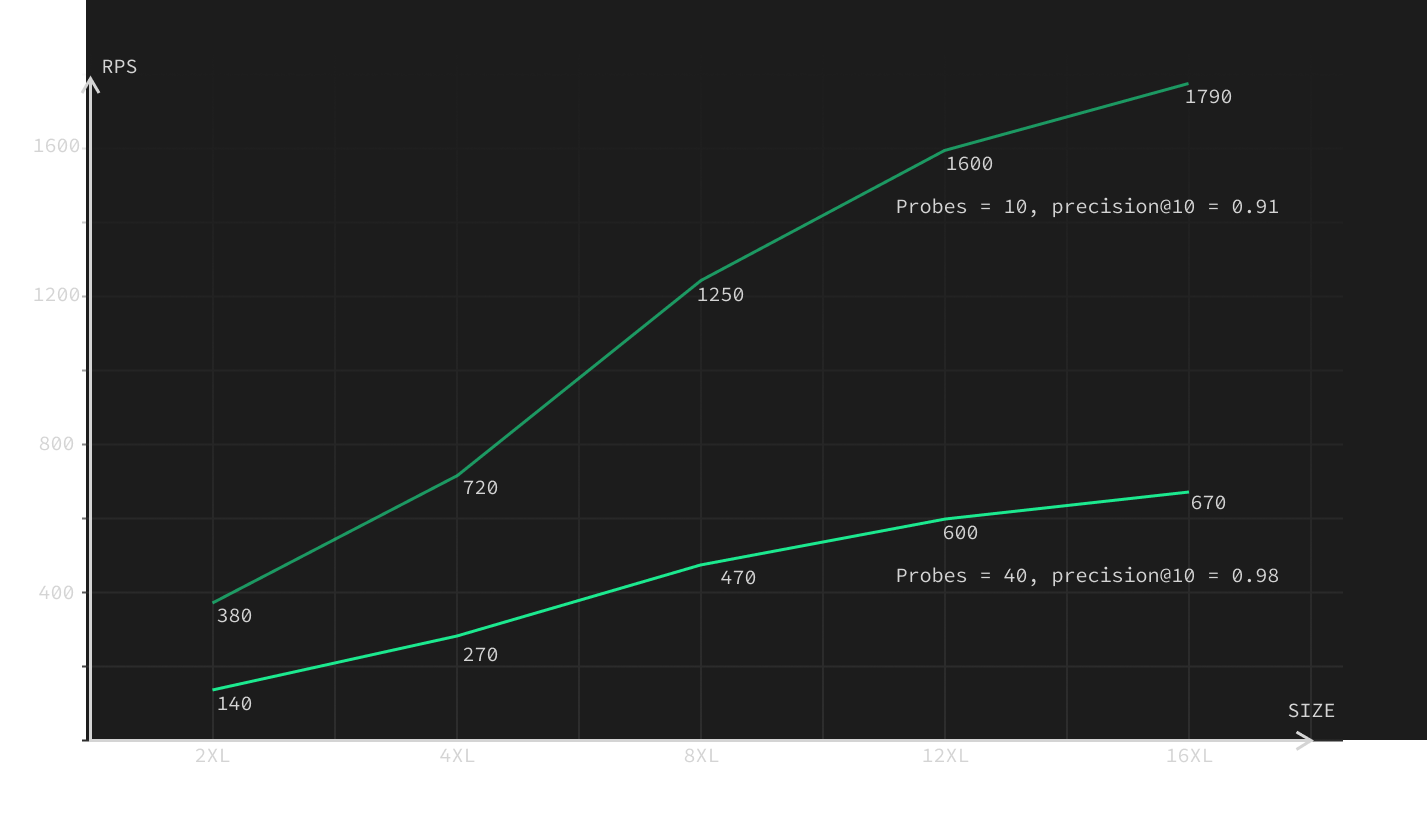
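The benchmark setup described above can be sketched roughly as follows. This is a minimal illustration rather than the actual test runner: it assumes vecs 0.4 or later, the collection name `benchmark` and the helper names `to_records` and `build_collection` are hypothetical, and the connection string and `n_lists` value are yours to supply.

```python
from typing import Iterable, List, Sequence, Tuple

# (id, vector, metadata): the record shape that vecs upserts
Record = Tuple[str, List[float], dict]


def to_records(embeddings: Iterable[Sequence[float]]) -> List[Record]:
    """Pair each embedding with a generated string id and empty metadata."""
    return [(f"vec_{i}", list(vector), {}) for i, vector in enumerate(embeddings)]


def build_collection(connection_string: str, embeddings, dimension: int, n_lists: int):
    """Create a collection, upload embeddings, and build an inner-product IVFFlat index."""
    import vecs  # imported lazily so to_records() works without vecs installed

    client = vecs.create_client(connection_string)
    collection = client.get_or_create_collection(name="benchmark", dimension=dimension)
    collection.upsert(records=to_records(embeddings))
    collection.create_index(
        method=vecs.IndexMethod.ivfflat,
        measure=vecs.IndexMeasure.max_inner_product,
        index_arguments=vecs.IndexArgsIVFFlat(n_lists=n_lists),
    )
    return collection
```

Queries against the returned collection would then use the same measure, for example `collection.query(data=query_vector, limit=10, measure="max_inner_product")`.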

1536 Dimensions

This benchmark uses the dbpedia-entities-openai-1M dataset, which contains 1,000,000 embeddings of text. Each embedding has 1536 dimensions and was created with the OpenAI Embeddings API.

| Plan | Vectors | Lists | RPS | Latency Mean | Latency p95 | RAM Usage | Plan RAM |
|---|---|---|---|---|---|---|---|
| Free | 20,000 | 40 | 135 | 0.372 sec | 0.412 sec | 1 GB + 200 MB Swap | 1 GB |
| Small | 50,000 | 100 | 140 | 0.357 sec | 0.398 sec | 1.8 GB | 2 GB |
| Medium | 100,000 | 200 | 130 | 0.383 sec | 0.446 sec | 3.7 GB | 4 GB |
| Large | 250,000 | 500 | 130 | 0.378 sec | 0.434 sec | 7 GB | 8 GB |
| XL | 500,000 | 1000 | 235 | 0.213 sec | 0.271 sec | 13.5 GB | 16 GB |
| 2XL | 1,000,000 | 2000 | 380 | 0.133 sec | 0.236 sec | 30 GB | 32 GB |
| 4XL | 1,000,000 | 2000 | 720 | 0.068 sec | 0.120 sec | 35 GB | 64 GB |
| 8XL | 1,000,000 | 2000 | 1250 | 0.039 sec | 0.066 sec | 38 GB | 128 GB |
| 12XL | 1,000,000 | 2000 | 1600 | 0.030 sec | 0.052 sec | 41 GB | 192 GB |
| 16XL | 1,000,000 | 2000 | 1790 | 0.029 sec | 0.051 sec | 45 GB | 256 GB |

With 10 probes, precision is 0.91 for 1,000,000 vectors and between 0.95 and 0.99 for 500,000 vectors or fewer. To increase precision, increase the number of probes.
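To check precision on your own data, you can compare the ids returned by the index with the ids from an exact (unindexed) search for the same query. The sketch below assumes precision means the fraction of the true top-k neighbours that the approximate search returned; the function name `precision_at_k` is hypothetical.

```python
from typing import Sequence


def precision_at_k(approx_ids: Sequence[str], exact_ids: Sequence[str], k: int) -> float:
    """Fraction of the exact top-k neighbours that also appear in the approximate top-k."""
    if k <= 0:
        raise ValueError("k must be positive")
    overlap = set(approx_ids[:k]) & set(exact_ids[:k])
    return len(overlap) / k
```

Averaging this value over many queries, at several probe settings, shows how much precision each extra probe buys for your dataset.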

960 Dimensions

This benchmark uses the gist-960-angular dataset, which contains 1,000,000 embeddings of images. Each embedding is 960 dimensions.

| Plan | Vectors | Lists | RPS | Latency Mean | Latency p95 | RAM Usage | Plan RAM |
|---|---|---|---|---|---|---|---|
| Free | 30,000 | 30 | 75 | 0.065 sec | 0.088 sec | 1 GB + 100 MB Swap | 1 GB |
| Small | 100,000 | 100 | 78 | 0.064 sec | 0.092 sec | 1.8 GB | 2 GB |
| Medium | 250,000 | 250 | 58 | 0.085 sec | 0.129 sec | 3.2 GB | 4 GB |
| Large | 500,000 | 500 | 55 | 0.088 sec | 0.140 sec | 5 GB | 8 GB |
| XL | 1,000,000 | 1000 | 110 | 0.046 sec | 0.070 sec | 14 GB | 16 GB |
| 2XL | 1,000,000 | 1000 | 235 | 0.083 sec | 0.136 sec | 10 GB | 32 GB |
| 4XL | 1,000,000 | 1000 | 420 | 0.071 sec | 0.106 sec | 11 GB | 64 GB |
| 8XL | 1,000,000 | 1000 | 815 | 0.072 sec | 0.106 sec | 13 GB | 128 GB |
| 12XL | 1,000,000 | 1000 | 1150 | 0.052 sec | 0.078 sec | 15.5 GB | 192 GB |
| 16XL | 1,000,000 | 1000 | 1345 | 0.072 sec | 0.106 sec | 17.5 GB | 256 GB |

512 Dimensions

This benchmark uses the GloVe Reddit comments dataset, which contains 1,623,397 embeddings of text. Each embedding is 512 dimensions. Random vectors were generated for queries.

| Plan | Vectors | Lists | RPS | Latency Mean | Latency p95 | RAM Usage | Plan RAM |
|---|---|---|---|---|---|---|---|
| Free | 100,000 | 100 | 250 | 0.395 sec | 0.432 sec | 1 GB + 300 MB Swap | 1 GB |
| Small | 250,000 | 250 | 440 | 0.223 sec | 0.250 sec | 2 GB + 200 MB Swap | 2 GB |
| Medium | 500,000 | 500 | 425 | 0.116 sec | 0.143 sec | 3.7 GB | 4 GB |
| Large | 1,000,000 | 1000 | 515 | 0.096 sec | 0.116 sec | 7.5 GB | 8 GB |
| XL | 1,623,397 | 1275 | 465 | 0.212 sec | 0.272 sec | 14 GB | 16 GB |
| 2XL | 1,623,397 | 1275 | 1400 | 0.061 sec | 0.075 sec | 22 GB | 32 GB |
| 4XL | 1,623,397 | 1275 | 1800 | 0.027 sec | 0.043 sec | 20 GB | 64 GB |
| 8XL | 1,623,397 | 1275 | 2850 | 0.032 sec | 0.049 sec | 21 GB | 128 GB |
| 12XL | 1,623,397 | 1275 | 3700 | 0.020 sec | 0.036 sec | 26 GB | 192 GB |
| 16XL | 1,623,397 | 1275 | 3700 | 0.025 sec | 0.042 sec | 29 GB | 256 GB |

Note: You can upload more vectors to a single table if memory allows (for example, on the 4XL plan and higher for OpenAI embeddings). However, this affects query performance: RPS will be lower and latency will be higher. Scaling should be almost linear, but we recommend benchmarking your workload to find the optimal number of vectors per table and per database instance.
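As a rough starting point for judging whether memory allows more vectors, you can estimate the raw storage of the embedding column. The sketch below relies on pgvector storing each vector in `4 * dimensions + 8` bytes; the function name `raw_vector_bytes` is hypothetical. Treat the result as a lower bound only: it excludes the index, row overhead, and Postgres buffers, so actual RAM usage is considerably higher.

```python
def raw_vector_bytes(num_vectors: int, dimensions: int) -> int:
    """Lower-bound storage for the embedding column: 4 bytes per dimension plus 8 per vector."""
    if num_vectors < 0 or dimensions <= 0:
        raise ValueError("num_vectors must be >= 0 and dimensions must be > 0")
    return num_vectors * (4 * dimensions + 8)


# Example: 1,000,000 OpenAI embeddings with 1536 dimensions
estimate_gb = raw_vector_bytes(1_000_000, 1536) / 1e9  # about 6.15 GB of raw vector data
```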

Performance tips

There are various ways to improve your pgvector performance. Here are some tips:

Pre-warming your database

It's useful to execute a few thousand “warm-up” queries before going into production. This helps with RAM utilization and can also help you confirm that you've selected the right instance size for your workload.

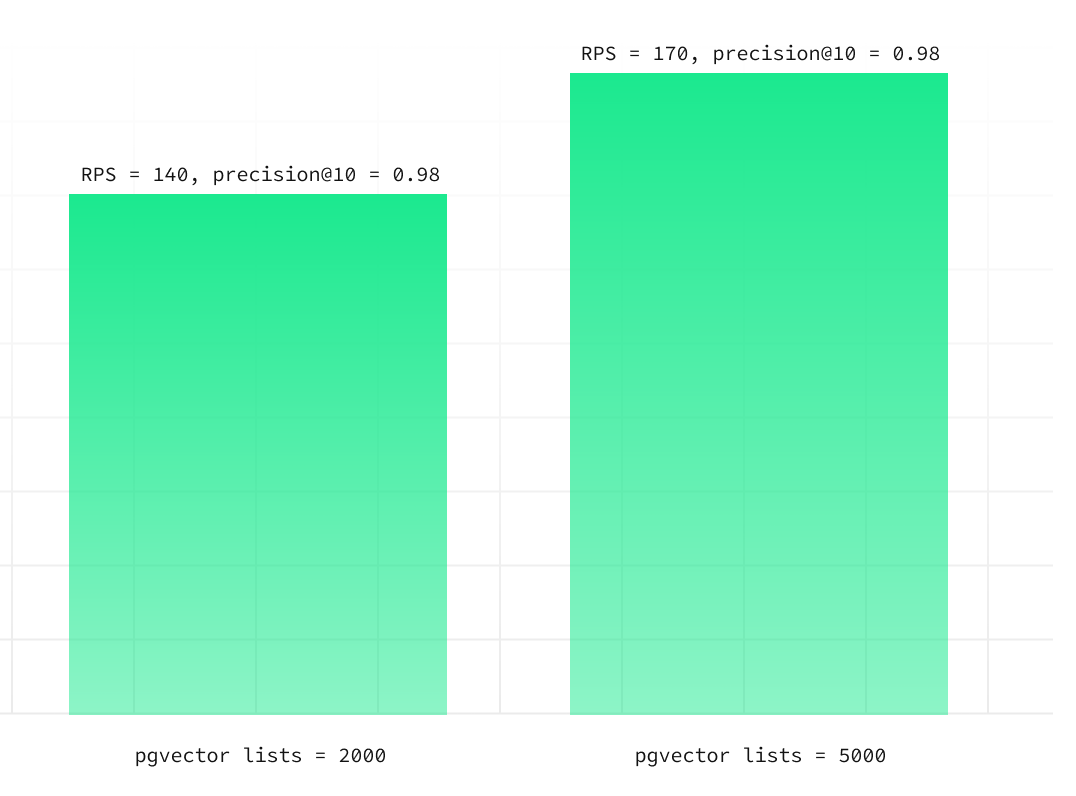

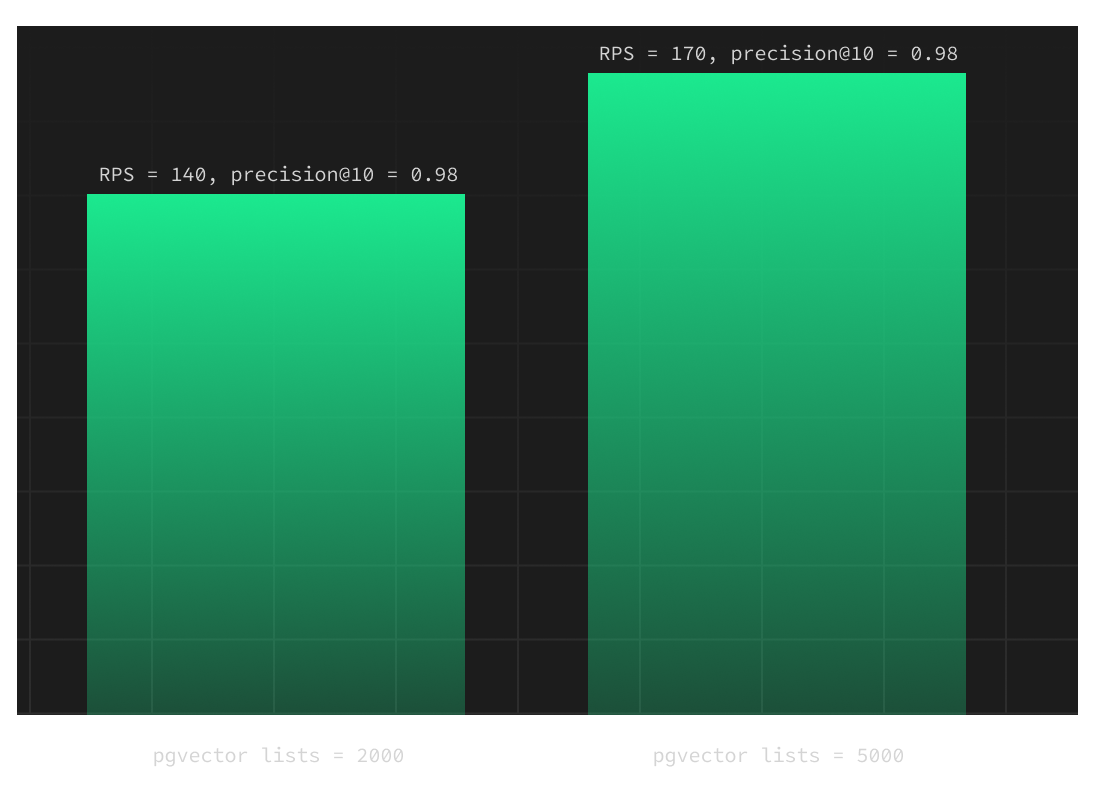
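A warm-up pass can be as simple as the loop below. This is a sketch under stated assumptions: `run_query` is a hypothetical callable that you provide (for example, a thin wrapper around your collection's query method), and random vectors stand in for real queries. Replaying representative production queries is likely to warm the cache more faithfully.

```python
import random
from typing import Callable, Sequence


def warm_up(
    run_query: Callable[[Sequence[float]], object],
    dimension: int,
    n_queries: int = 2000,
    seed: int = 0,
) -> int:
    """Send n_queries random vectors through run_query and return how many completed."""
    rng = random.Random(seed)
    completed = 0
    for _ in range(n_queries):
        query_vector = [rng.uniform(-1.0, 1.0) for _ in range(dimension)]
        run_query(query_vector)
        completed += 1
    return completed
```

Watching RAM and swap usage while this runs gives an early signal of whether the instance size fits the workload.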

Increase the number of lists

You can increase requests per second (RPS) by increasing the number of lists. There is an important caveat: building the index takes longer with more lists.
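If you need a starting value for the number of lists, the pgvector documentation suggests `rows / 1000` for up to 1,000,000 rows and `sqrt(rows)` above that. The sketch below encodes that rule of thumb; the function name `suggested_lists` is hypothetical, and the result is a baseline to tune from, not a fixed recommendation. Note that the 1536-dimension benchmark above used roughly twice this baseline.

```python
import math


def suggested_lists(rows: int) -> int:
    """Starting point for IVFFlat lists: rows/1000 up to 1M rows, sqrt(rows) beyond that."""
    if rows <= 0:
        raise ValueError("rows must be positive")
    if rows <= 1_000_000:
        return max(1, rows // 1000)
    return math.ceil(math.sqrt(rows))
```

For example, `suggested_lists(1_623_397)` gives 1275, which matches the lists value used for the full 512-dimension dataset.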

Check out more tips and the complete step-by-step guide in Going to Production for AI applications.

Benchmark Methodology

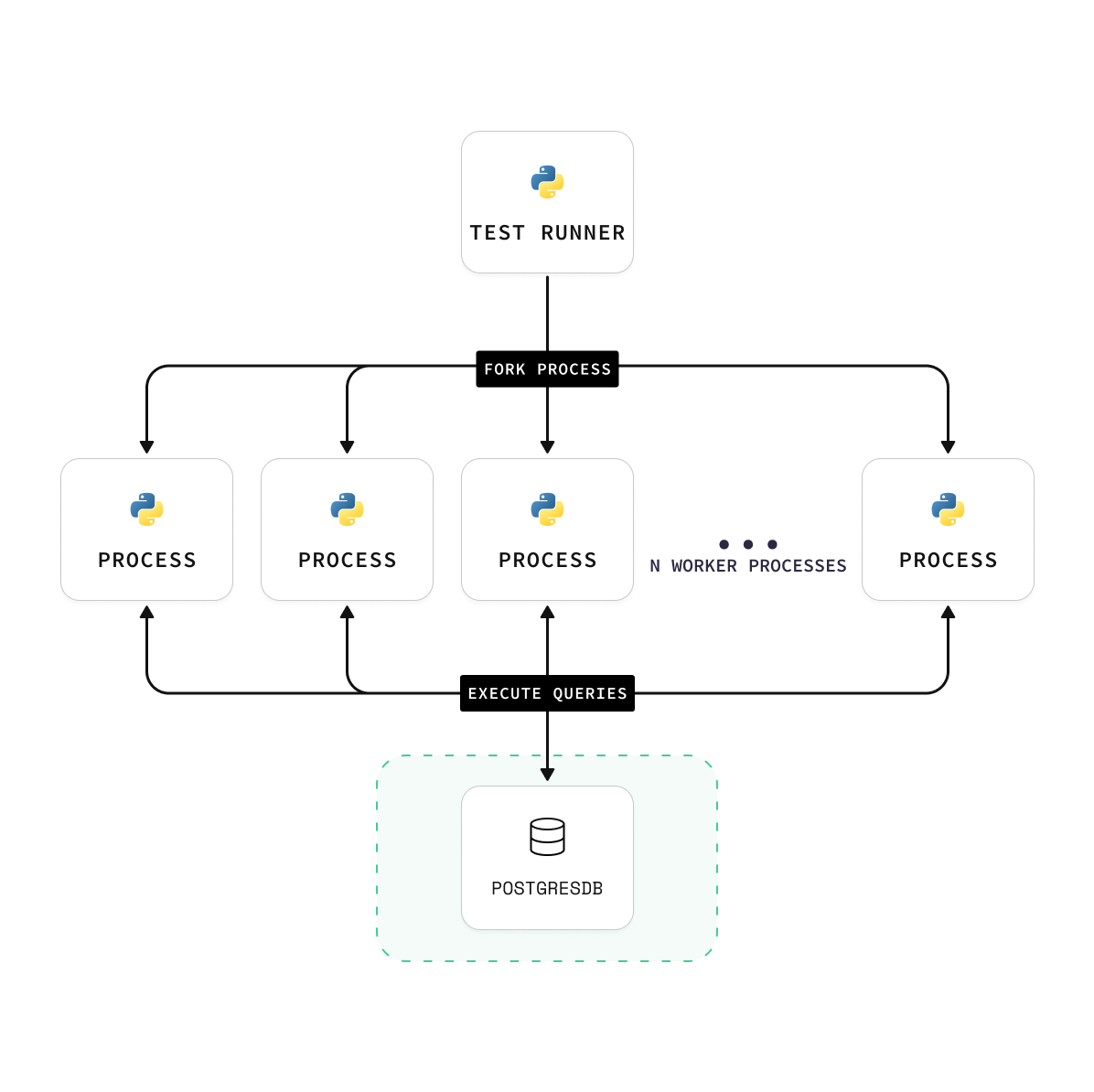

We follow techniques outlined in the ANN Benchmarks methodology. A Python test runner is responsible for uploading the data, creating the index, and running the queries. The pgvector engine is implemented using vecs, a Python client for pgvector.

Each test runs for at least 30 to 40 minutes and includes a series of experiments executed at different concurrency levels to measure the engine's performance under different load types. The results are then averaged.

As a general recommendation, we suggest using a concurrency level of 5 or more for most workloads and 30 or more for high-load workloads.
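To benchmark your own workload at a given concurrency level, a small load-test harness along these lines can report the same metrics as the tables above (RPS, mean latency, p95 latency). This is a simplified sketch, not the test runner used for the published results: `run_query` is a hypothetical zero-argument callable that issues one query, and threads stand in for concurrent clients.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def run_load_test(
    run_query: Callable[[], object], concurrency: int, total_queries: int
) -> Dict[str, float]:
    """Run total_queries calls across `concurrency` threads and summarize throughput and latency."""
    if concurrency <= 0 or total_queries <= 0:
        raise ValueError("concurrency and total_queries must be positive")

    def timed_call(_: int) -> float:
        start = time.perf_counter()
        run_query()
        return time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies: List[float] = list(pool.map(timed_call, range(total_queries)))
    wall_time = time.perf_counter() - wall_start

    ordered = sorted(latencies)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)  # nearest-rank percentile
    return {
        "rps": total_queries / wall_time,
        "latency_mean": statistics.mean(latencies),
        "latency_p95": ordered[p95_index],
    }
```

Running it at a concurrency of 5 and again at 30 gives a quick comparison between a typical and a high-load profile.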